From the earliest days of AI image generation, LAB7’s creative director Samuel Tétreault envisioned the creative potential of putting this technology in dialogue with live performance and human movement. To bring this vision to life, we went through several iterations, exploring generative AI tools as they evolved.

For our first exploration in 2023, we simply used artificial intelligence to transform pre-recorded videos, which confirmed the creative potential of our concept. Then one of our interns, a graduating computer engineering student, built a first prototype that created a “live” interaction between human movement and generative AI. This pipeline combined a video camera, silhouette cropping, and a search through a bank of images that had been generated beforehand.

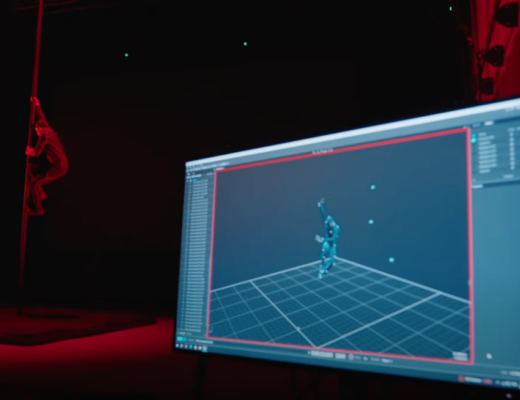

The second iteration of the project, in the summer of 2024, replaced the video camera with a Kinect camera and relied on the integration of Stable Diffusion into ComfyUI. Under the creative direction of Samuel Tétreault, we created several visual recipes and invited acrobatic dancers to bring them to life. This prototype was presented at Forum LAB7 in November 2024 and then at the E-AI conference in February 2025. The system could process 10 images per second, which made the rendering slightly jerky and influenced certain artistic choices, particularly the rhythm of the performance.

In February 2025, we took advantage of the integration of Stable Diffusion into the VVVV software to further develop our concept and achieve greater visual fluidity. We used this new pipeline for the opening ceremony of Hannover Messe, the world’s largest industrial technology trade fair, in a performance directed by The 7 Fingers’ artistic co-director Patrick Léonard, in collaboration with the formidable Toronto-based Indigenous company Red Sky Performance and our sister company Supply + Demand.

Finally, we refined the latest version of our prototype during a creative lab in July 2025, funded by the City of Montreal. Samuel Tétreault worked hand in hand with digital artist Raphaël Dupont, from our sister company Supply + Demand, to create a dozen visual recipes that apply AI image generation to compositions combining the artists’ silhouettes with animated 3D objects and particles. We also developed visual compositions to explore the potential of the multi-screen immersive space in which the residency’s final presentation took place, with visuals brought to life by four acrobat-dancers. Both this presentation and a shorter version showcased at HUB Montréal in October 2025 were a great success, and our audience of professionals was impressed by the aesthetic and technical quality of the work.

Throughout this project, we conducted numerous tests with our research partner, the HUPR Center, for example trying the system with more complex circus disciplines, accessories, and other capture methods. SAT also created a user-friendly interface integrated into their open-source solution Ossia, so that creators can easily explore and create with generative AI and human movement. Now that our prototype has proven its potential, we can use it in a performance context with acrobats and dancers, but also as an interactive installation open to the public. And, why not, in a show soon!

We would like to thank Ville de Montréal and our partners for their support.
